@misc{taunyazov2021extended,
  title={Extended Tactile Perception: Vibration Sensing through Tools and Grasped Objects},
  author={Tasbolat Taunyazov and Luar Shui Song and Eugene Lim and Hian Hian See and David Lee and Benjamin C. K. Tee and Harold Soh},
  year={2021},
  eprint={2106.00489},
  archivePrefix={arXiv},
  primaryClass={cs.RO}
}

Tactile Perception

Our ability as humans to navigate the environment depends heavily on the quality and speed of our sensing. Tactile sensing, the sensation of touch and pressure, is especially important for both coarse and fine object manipulation tasks, such as handling parcels and writing. Humans use tactile data in real time for planning muscle movements, rapid tactile perception, tactile object recognition, and overall mobility. Similarly, service robots that interact with humans or objects need fast and reliable tactile feedback.

In our lab, we are particularly interested in how perception with next-generation artificial skins can enhance physical human-robot interaction and enable trustworthy collaboration.

★ denotes CLeAR Group members

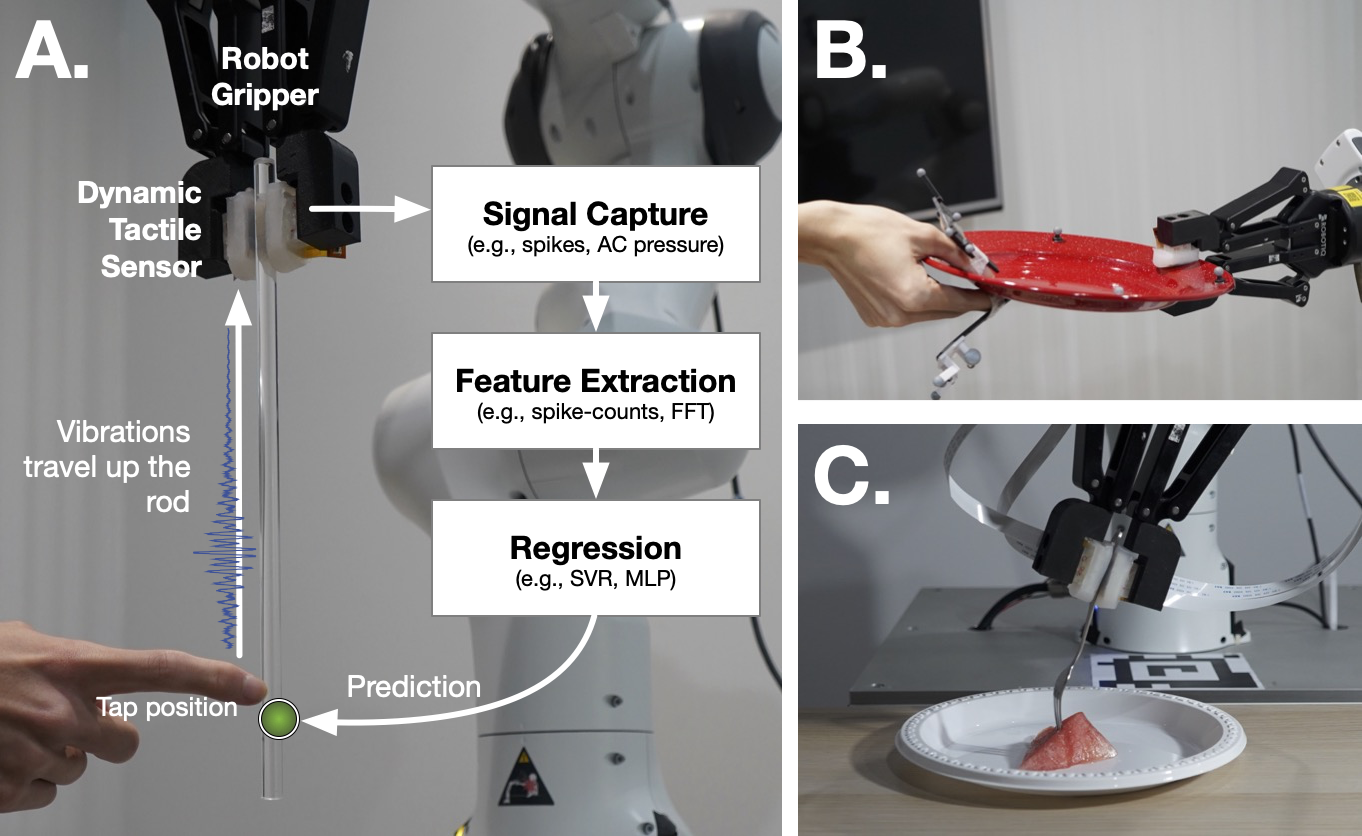

Extended Tactile Perception: Vibration Sensing through Tools and Grasped Objects

[PDF]

Tasbolat Taunyazov★, Luar Shui Song★, Eugene Lim★, Hian Hian See, David Lee, Benjamin C.K. Tee, Harold Soh★

Work in Progress

TL;DR: We show how robots can extend their perception through grasped tools/objects via dynamic tactile sensing.

-

More Links:

[BibTeX]

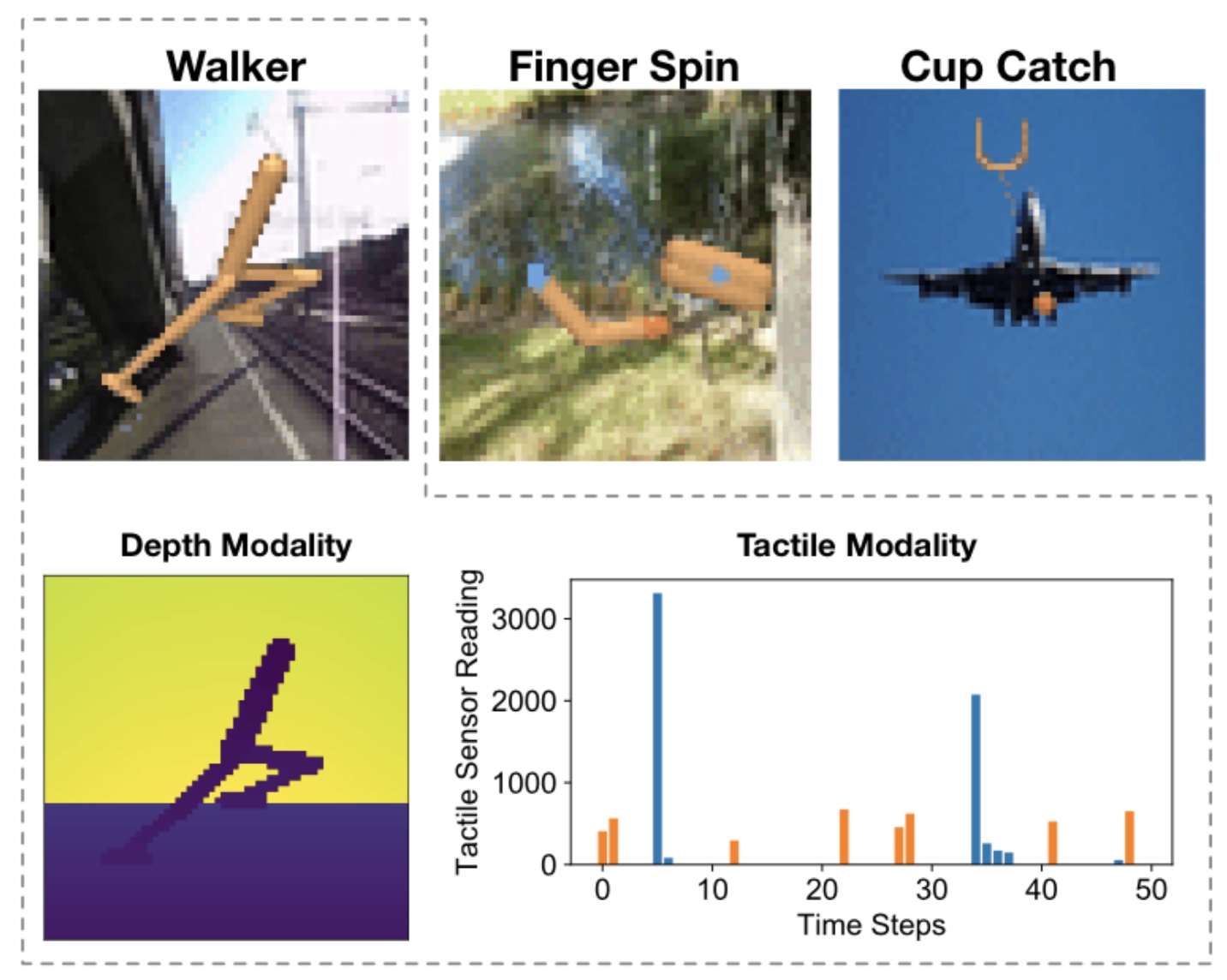

Multi-Modal Mutual Information (MuMMI) Training for Robust Self-Supervised Deep Reinforcement Learning

[PDF]

Kaiqi Chen★, Yong Lee★, and Harold Soh★

IEEE International Conference on Robotics and Automation (ICRA), 2021

TL;DR: We propose new mutual-information-based contrastive losses for multi-modal/multi-sensor learning (e.g., vision, depth, and tactile sensing).

-

More Links:

[BibTeX]

@inproceedings{Chen2021MuMMI,
  title={Multi-Modal Mutual Information (MuMMI) Training for Robust Self-Supervised Deep Reinforcement Learning},
  author={Kaiqi Chen and Yong Lee and Harold Soh},
  year={2021},
  booktitle={IEEE International Conference on Robotics and Automation (ICRA)}
}

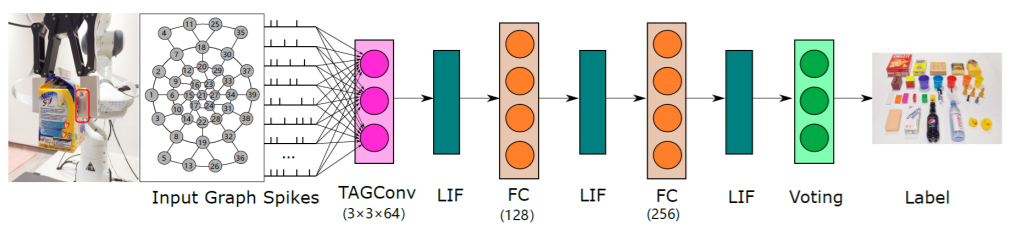

TactileSGNet: A Spiking Graph Neural Network for Event-based Tactile Object Recognition

[PDF]

Fuqiang Gu★, Weicong Sng★, Tasbolat Taunyazov★, and Harold Soh★

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020

TL;DR: We propose a new spiking graph neural network for recognizing objects by touch using event tactile sensors.

-

More Links:

[BibTeX]

@inproceedings{gu2020tactile,
  title={TactileSGNet: A Spiking Graph Neural Network for Event-based Tactile Object Recognition},
  author={Fuqiang Gu and Weicong Sng and Tasbolat Taunyazov and Harold Soh},
  booktitle = {IEEE/RSJ International Conference on Intelligent Robots and Systems},
  year = {2020},
  month = {October}
}

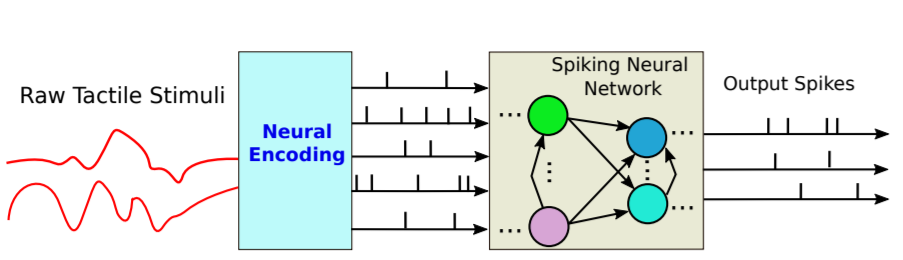

Fast Texture Classification Using Tactile Neural Coding and Spiking Neural Network

[PDF]

Tasbolat Taunyazov★, Yansong Chua, Ruihan Gao, Harold Soh★, and Yan Wu

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020

TL;DR: We show how texture classification is possible using tactile sensing and spiking neural networks.

-

More Links:

[BibTeX]

@inproceedings{taunyazov2020texture,
  title={Fast Texture Classification Using Tactile Neural Coding and Spiking Neural Network},
  author={Tasbolat Taunyazov and Yansong Chua and Ruihan Gao and Harold Soh and Yan Wu},
  booktitle = {IEEE/RSJ International Conference on Intelligent Robots and Systems},
  year = {2020},
  month = {October}
}

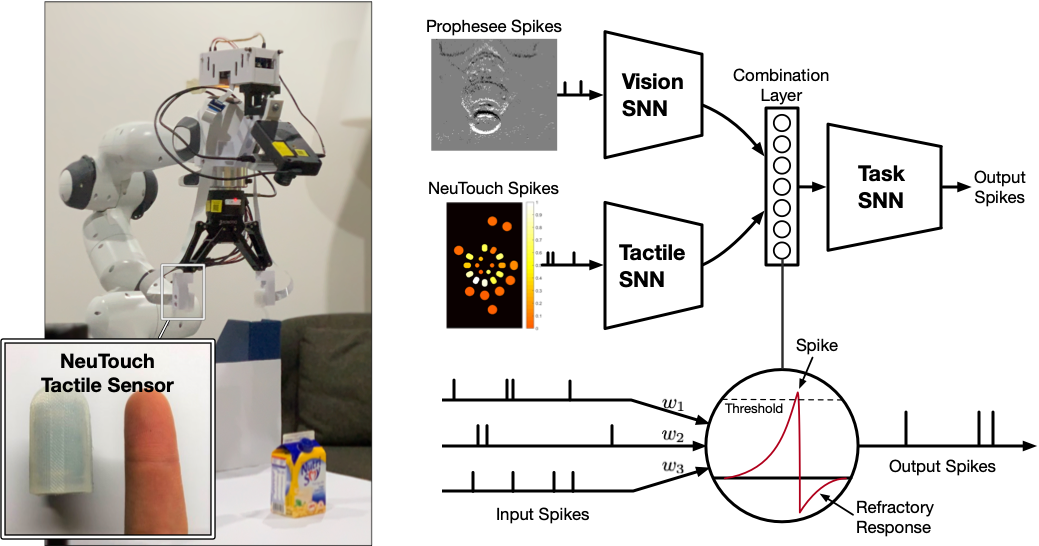

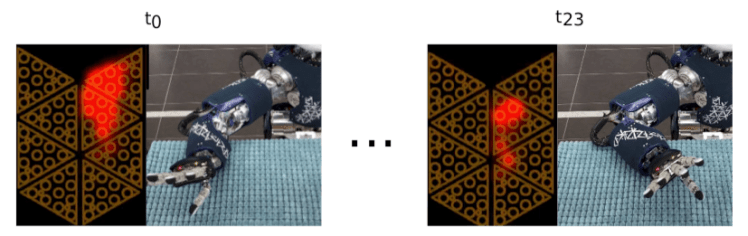

Event-Driven Visual-Tactile Sensing and Learning for Robots

[PDF]

[Blog]

Tasbolat Taunyazov★, Weicong Sng★, Hian Hian See, Brian Lim, Jethro Kuan★, Abdul Fatir Ansari★, Benjamin Tee, and Harold Soh★

Robotics: Science and Systems Conference (RSS), 2020

TL;DR: We combine event vision and event tactile sensing in a power-efficient perception framework.

-

More Links:

[Paper Site]

[BibTeX]

@inproceedings{taunyazov20event,
  title={Event-Driven Visual-Tactile Sensing and Learning for Robots},
  author={Tasbolat Taunyazov and Weicong Sng and Hian Hian See and Brian Lim and Jethro Kuan and Abdul Fatir Ansari and Benjamin Tee and Harold Soh},
  booktitle = {Proceedings of Robotics: Science and Systems},
  year = {2020},
  month = {July}
}

Towards Effective Tactile Identification of Textures using a Hybrid Touch Approach

[PDF]

Tasbolat Taunyazov★, Hui Fang Koh, Yan Wu, Caixia Cai, and Harold Soh★

IEEE International Conference on Robotics and Automation (ICRA), 2019

TL;DR: We show that touch motions combining touches and slides enable better texture classification.

-

More Links:

[Github]

[BibTeX]

@inproceedings{taunyazov2019towards,
  title={Towards effective tactile identification of textures using a hybrid touch approach},
  author={Taunyazov, Tasbolat and Koh, Hui Fang and Wu, Yan and Cai, Caixia and Soh, Harold},
  booktitle={2019 International Conference on Robotics and Automation (ICRA)},
  pages={4269--4275},
  year={2019},
  organization={IEEE}
}

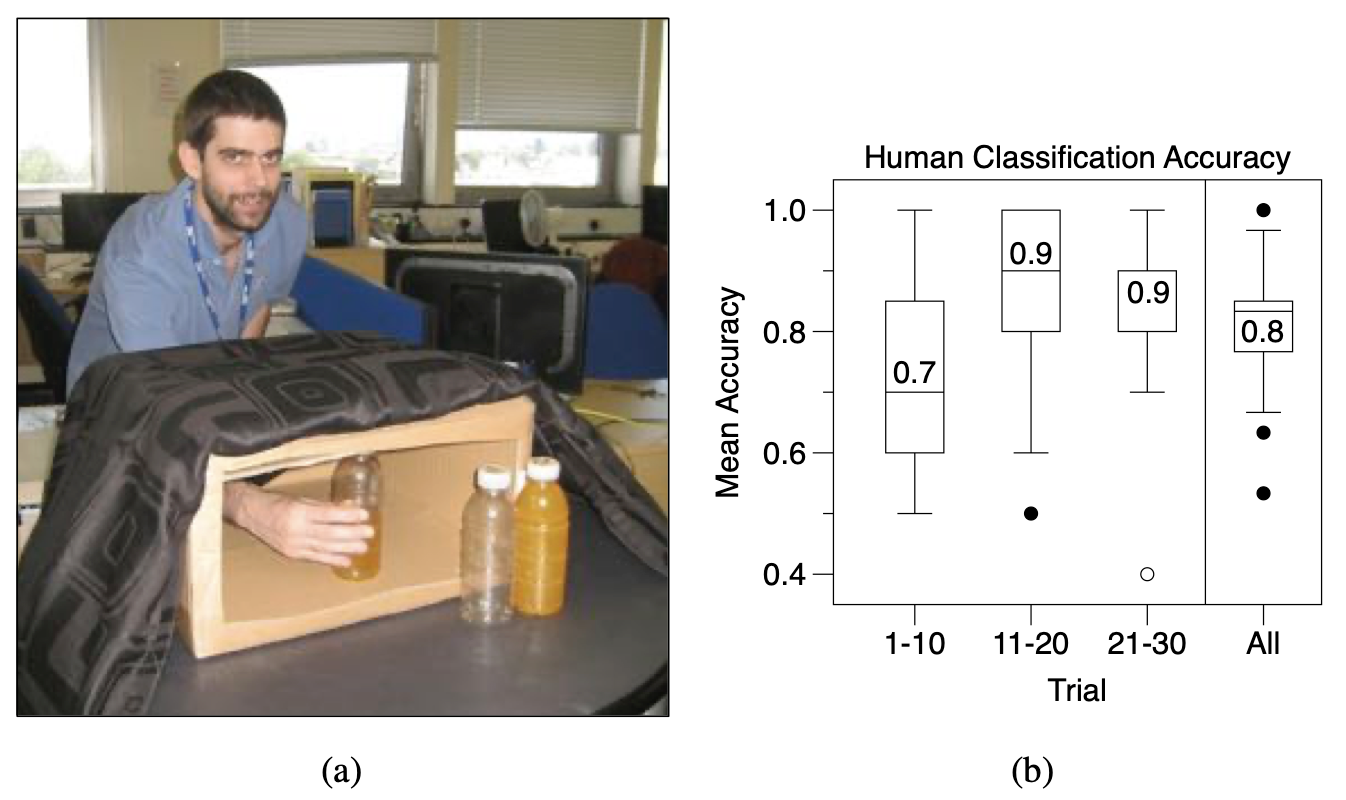

Incrementally Learning Objects by Touch: Online Discriminative and Generative Models for Tactile-Based Recognition

[PDF]

Harold Soh and Yiannis Demiris

IEEE Transactions on Haptics, 2014.

TL;DR: Journal version of our IROS 2012 paper on online tactile learning. Covers both generative and discriminative models and includes unsupervised online learning.

-

More Links:

[Publisher Link]

[BibTeX]

@article{article,
  author = {Soh, Harold and Demiris, Yiannis},
  year = {2014},
  month = {05},
  pages = {512--525},
  title = {Incrementally Learning Objects by Touch: Online Discriminative and Generative Models for Tactile-Based Recognition},
  volume = {7},
  journal = {IEEE Transactions on Haptics},
  doi = {10.1109/TOH.2014.2326159}
}

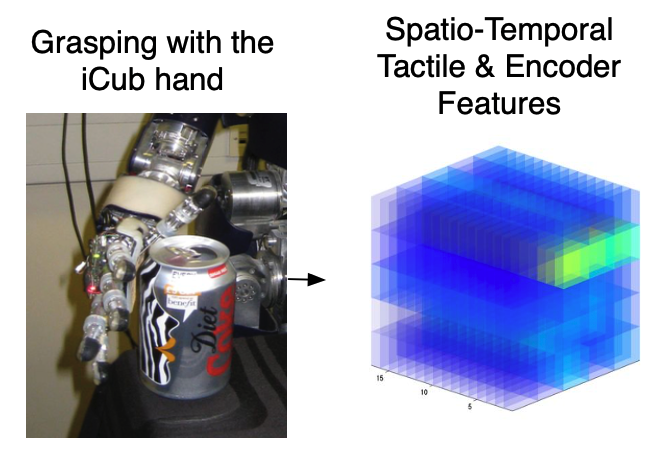

Online Spatio-Temporal Gaussian Process Experts with Application to Tactile Classification

[PDF]

Harold Soh, Yanyu Su and Yiannis Demiris

IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Portugal, 2012.

TL;DR: We show that object classification by touch on the iCub robot can be learned online.

-

More Links:

[Publisher Link]

[Github]

[BibTeX]

@inproceedings{soh2012tactile,
  author={Soh, Harold and Su, Yanyu and Demiris, Yiannis},
  booktitle={2012 IEEE/RSJ International Conference on Intelligent Robots and Systems},
  title={Online spatio-temporal Gaussian process experts with application to tactile classification},
  year={2012},
  pages={4489--4496},
  doi={10.1109/IROS.2012.6385992}
}